Family files lawsuit saying Roblox, Discord failed to protect 10-year-old daughter from being abducted

SAN MATEO, California (Gray News) – A family in California is suing Roblox and Discord, saying the online platforms failed to protect their 10-year-old daughter from being abducted by a predator.

The lawsuit was filed by law firm Anapol Weiss last week.

According to a news release, this is the seventh such lawsuit the firm has brought against Roblox, which it says underscores “a pattern of systemic negligence and failure to protect child users.”

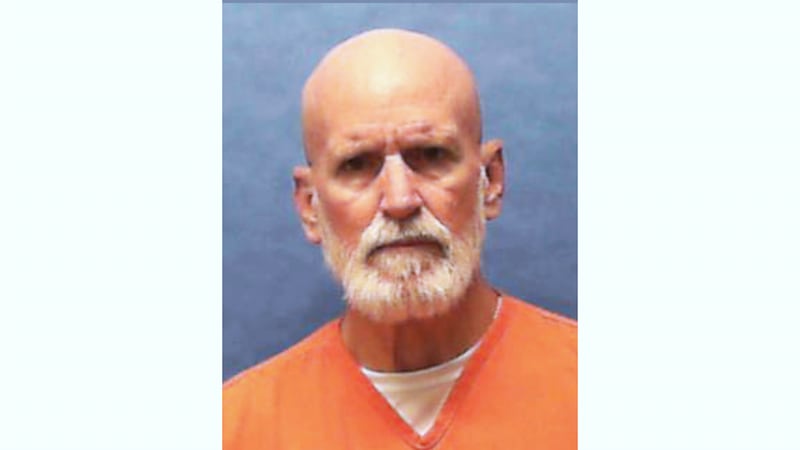

According to the lawsuit, 27-year-old Matthew Macatuno Naval posed as a peer to contact the girl through Roblox. Then, the conversation moved to Discord, where the child was allegedly “groomed and manipulated” into sharing her home address.

The lawsuit says that on April 13, Naval abducted the girl from her home in Taft, California, about two hours north of Los Angeles. She was eventually found hundreds of miles away, in his vehicle in Elk Grove, after a frantic multi-agency search, according to the suit.

“Despite aggressively marketing themselves as family-friendly and safe for kids, Roblox and Discord are both accused of turning a blind eye to rampant abuse in the name of user growth and profits,” the news release reads.

Roblox and Discord are accused of allowing “adult strangers to communicate directly with children; failing to verify age or identity; and providing features that predators use to isolate and manipulate minors.”

“This case is horrifying, but it’s the natural consequence of how these platforms choose to operate,” said Alexandra Walsh, partner at Anapol Weiss, in a statement. “Roblox and Discord know predators are exploiting children on their platforms. They’ve known for years, and for years refused to implement simple and essential protections. This wasn’t a loophole. It was the system working exactly as they designed it.”

The lawsuit names both Roblox Corporation and Discord, Inc., as defendants.

The complaint includes claims of negligence, negligent design, failure to warn, and design defect, alleging that both companies knew their platforms were being exploited by adult predators, but chose not to act.

Roblox has an in-depth safety guide for parents and users, which reads, “Safety is foundational to everything we do. We’re building a platform with industry-leading safety and civility features and continuously evolve our platform as our community grows.”

According to a Roblox news release about its partnership with law enforcement, more than 100 million users of all ages have a “safe and positive experience” on Roblox every day.

The company said it continually innovates its extensive safety systems, which combine AI with the expertise of thousands of trained professionals.

“When these systems detect early signs of possible illicit activity, violent threats, or other real-life harms, we investigate and, where warranted, take appropriate enforcement action, including reporting them to law enforcement,” Roblox said in the news release.

Roblox said it submitted 24,522 reports to the National Center for Missing and Exploited Children in 2024.

In comparison, data shows that Discord submitted 241,354 reports, Facebook submitted over 8.5 million, Instagram over 3.3 million, TikTok over 1.3 million and Snapchat over 1.1 million.

Copyright 2025 Gray Local Media, Inc. All rights reserved.